10.6 Optional: Effect Size

Statistics is powerful at detecting when two numbers are different, even if they are very close together. For example, suppose we have a population of numbers with mean $\mu$, and that a hypothesis test is to be conducted on the following:

$$H_0: \mu = \mu_0 \qquad H_a: \mu \neq \mu_0$$

Suppose that $H_0$ is false, and that simple random sampling is conducted on the population. If the sample size is large enough, then $H_0$ will be rejected with high reliability, no matter how close together $\mu$ and $\mu_0$ might be. And if the difference between $\mu$ and $\mu_0$ is small relative to the population standard deviation $\sigma$, then that difference may not be of importance. That is, while the results of a test may be significant, the distinction may be unimportant.

For example, suppose that a golfer wants to test, when using her driver, whether her average drive off the tee is different from 240 yards. She could generate a sample of drives off the tee, carefully measuring the distance of each. If $\mu$ denotes her true mean driving distance, then the sample could be used to test the hypotheses:

$$H_0: \mu = 240 \qquad H_a: \mu \neq 240$$

Suppose that $H_0$ is false, and that $\mu = 240.03$, i.e., her true mean drive is about 1 inch longer than 240 yards. While 240.03 yards is definitely not equal to 240 yards, is that an important difference? No. But if she were to select a sufficiently large sample, it is highly likely that the statistical test would yield a significant result, i.e., a small $p$-value.

How do we tell when a significant result is important? For example, when is the difference between 240.03 and 240 important? In this case, unless the golfer is freakishly consistent, the difference of 1 inch is small relative to the standard deviation of her drive lengths. That is, whether a significant result is important is decided by considering the difference relative to the standard deviation within the population. Thus, if a result is significant, its importance can be measured via Cohen's $d$, which is the ratio

$$d = \frac{|\mu - \mu_0|}{\sigma}. \tag{10.7}$$

The smaller the ratio, the more the significance is due to a large sample size; the larger the ratio, the more important the difference. In statistics, this is called measuring the effect size, which means gauging whether a significant result is mostly due to a large sample (small effect) or reflects an important difference (large effect).
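As a quick check on Equation 10.7, the sketch below evaluates $d$ for the golfer's 0.03-yard difference under a few values of $\sigma$. The function name `cohens_d_population` is hypothetical, and the value $\sigma = 0.3$ is an illustrative assumption; $\sigma = 1$ and $\sigma = 0.03$ are the values used in Examples 10.6.1 and 10.6.2.

```python
def cohens_d_population(mu: float, mu0: float, sigma: float) -> float:
    """Equation 10.7: |mu - mu0| / sigma, for known population values."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    return abs(mu - mu0) / sigma


# Golfer scenario: true mean 240.03 yd vs. hypothesized 240 yd.
# The sigma values are illustrative, not measured from real drives.
for sigma in (1.0, 0.3, 0.03):
    d = cohens_d_population(240.03, 240.0, sigma)
    print(f"sigma = {sigma:5.2f} yd  ->  d = {d:.2f}")
```

The same 1-inch difference is a tiny effect when $\sigma = 1$ yard but a full standard deviation when $\sigma = 0.03$ yard.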

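In practice, $\mu$ and $\sigma$ are unknown, so Cohen's $d$ is approximated from the sample mean $\bar{x}$ and the sample standard deviation $s$:

$$d \approx \frac{|\bar{x} - \mu_0|}{s}. \tag{10.8}$$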

Because it mimics the ratio in Equation 10.7, the ratio in Equation 10.8 is not especially sensitive to sample size. In Equation 10.8, if $\sigma$ is known, then use it instead of $s$.
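One way to see why Equation 10.8 is insensitive to sample size: the one-sample test statistic is $t = (\bar{x} - \mu_0)/(s/\sqrt{n})$, which equals $\hat{d}\sqrt{n}$ when $\bar{x} > \mu_0$. The sketch below uses a hypothetical list of drive lengths and replicates it $k$ times, which keeps $\bar{x}$ fixed and $s$ nearly fixed while $n$ grows. The data and function names are assumptions made for illustration only.

```python
import math
import statistics


def cohens_d_hat(sample, mu0, sigma=None):
    """Equation 10.8: |x_bar - mu0| / s, or / sigma when sigma is known."""
    x_bar = statistics.mean(sample)
    scale = sigma if sigma is not None else statistics.stdev(sample)
    return abs(x_bar - mu0) / scale


def t_statistic(sample, mu0):
    """One-sample t statistic: (x_bar - mu0) / (s / sqrt(n))."""
    n = len(sample)
    return (statistics.mean(sample) - mu0) / (statistics.stdev(sample) / math.sqrt(n))


# Hypothetical drive lengths in yards (not real data).
base = [239.2, 240.9, 240.1, 239.6, 240.5, 240.3, 239.8, 240.6]
mu0 = 240.0
for k in (1, 10, 100, 1000):
    sample = base * k  # same mean, nearly the same s, larger n
    print(f"n = {len(sample):5d}  d_hat = {cohens_d_hat(sample, mu0):.3f}  "
          f"t = {t_statistic(sample, mu0):.2f}")
```

The estimate $\hat{d}$ stays roughly constant across the rows, while $t$ grows like $\sqrt{n}$, which is why a large enough sample eventually makes even a tiny difference significant.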

A couple of simulations will help illustrate.

Example 10.6.1.

The purpose of this example is to show that statistical tests, such as the $t$-test, can detect very small differences, and to show what Cohen's $d$ does in this situation. Let's use the golfer scenario above, and assume that the true mean driving distance is $\mu = 240.03$ yards, i.e., $\mu - \mu_0 = 0.03$ yards. To demonstrate the sensitivity of the $t$-test further, suppose that the difference is small relative to $\sigma$. For this purpose, let's assume that $\sigma = 1$ yard.

To detect such a small difference with a comparatively large $\sigma$, we need a large sample size $n$ for the test to work reliably.
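How large is "large"? A common rule of thumb, which is not part of this section's method, approximates the sample size needed for a two-sided test at level $\alpha$ with power $1-\beta$ as $n \approx \left((z_{1-\alpha/2} + z_{1-\beta})/d\right)^2$. The sketch below assumes $\alpha = 0.05$ and 80% power (a conventional choice) and uses the normal approximation, so it slightly understates $n$ when $n$ is small. The function name is hypothetical.

```python
import math
from statistics import NormalDist


def approx_sample_size(d, alpha=0.05, power=0.80):
    """Normal-approximation n for a two-sided one-sample test.

    n ~ ((z_{1 - alpha/2} + z_{power}) / d) ** 2, rounded up.
    Ignores the t-distribution correction (assumption).
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(((z_alpha + z_power) / d) ** 2)


print(approx_sample_size(0.03))  # golfer with sigma = 1 yd, so d = 0.03
print(approx_sample_size(1.0))   # golfer with sigma = 0.03 yd, so d = 1
```

Under these assumptions, $d = 0.03$ calls for several thousand drives, while $d = 1$ needs fewer than ten.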

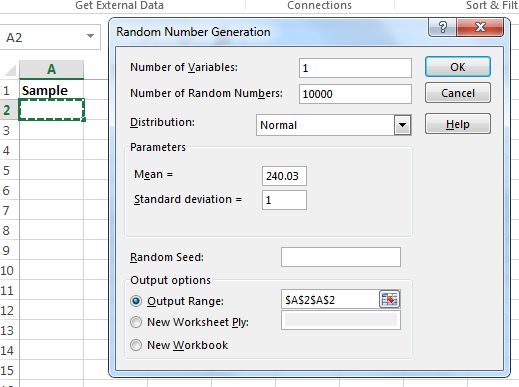

In Excel, use the Random Number Generator to generate the sample. Use 240.03 for the mean, 1 for the standard deviation, and the chosen sample size $n$ for the number of random numbers, as shown in Figure 10.32.

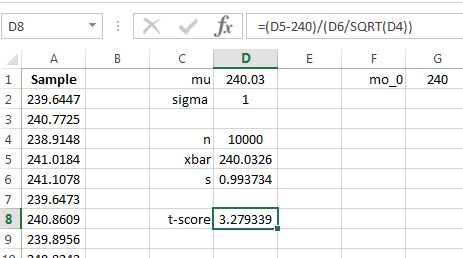

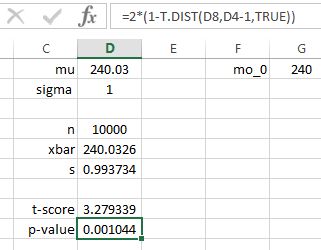

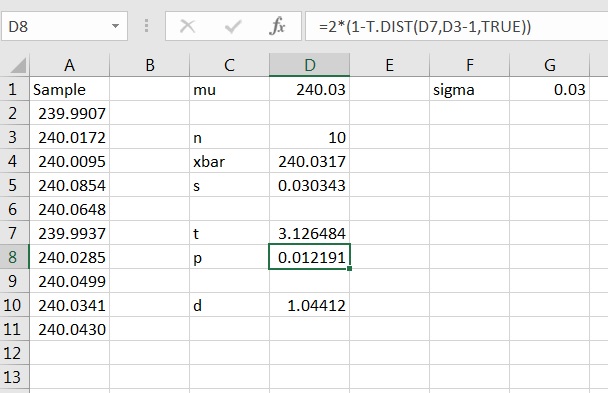

Compute the summary statistics for the sample, and then compute the test statistic, as shown in Figure 10.33.

The corresponding $p$-value is shown in Figure 10.34. You are very likely to get a small $p$-value. If you don't, simulating again might do the trick. Note that in the simulation displayed, the result is significant unless a very small value of $\alpha$ was chosen.

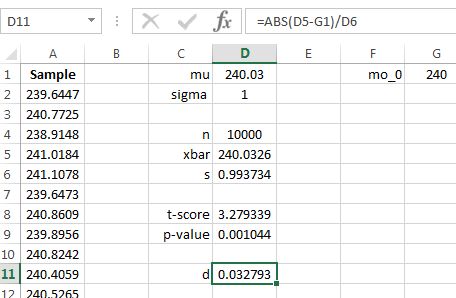

Yet, note the approximate value of Cohen's $d$, as shown in Figure 10.35.[46]

[46] In this example, because we are in control of the simulation, the exact value of Cohen's $d$ can be calculated. In this case, the exact value is $d = |240.03 - 240|/1 = 0.03$.

The value is small, strongly suggesting that the significant result is due mainly to a large sample size, just as expected.
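For readers without Excel, the following sketch reproduces the steps of Example 10.6.1 with Python's standard library. The values $\mu = 240.03$, $\sigma = 1$, and $\mu_0 = 240$ come from the example; the sample size `N = 20_000` and the seed are assumptions, not the values used in the figures. Because $n$ is so large, the two-sided $p$-value is computed with a normal approximation to the $t$ distribution.

```python
import math
import random
import statistics
from statistics import NormalDist

# From Example 10.6.1: mu = 240.03, sigma = 1, mu0 = 240.
# N and SEED are illustrative assumptions.
MU_TRUE, SIGMA, MU0 = 240.03, 1.0, 240.0
N, SEED = 20_000, 2024

rng = random.Random(SEED)
sample = [rng.gauss(MU_TRUE, SIGMA) for _ in range(N)]

x_bar = statistics.mean(sample)
s = statistics.stdev(sample)
t = (x_bar - MU0) / (s / math.sqrt(N))

# For n this large, the t distribution is practically standard normal.
p_value = 2 * (1 - NormalDist().cdf(abs(t)))
d_hat = abs(x_bar - MU0) / s  # Equation 10.8

print(f"x_bar = {x_bar:.4f}, s = {s:.4f}, t = {t:.2f}, p = {p_value:.4g}")
print(f"Cohen's d (estimate) = {d_hat:.3f}  (exact value: 0.03)")
```

A typical run gives a small $p$-value together with $\hat{d}$ close to 0.03, matching the conclusion above.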

Example 10.6.2.

The purpose of this example is to illustrate Cohen's $d$ when there is a large effect. We can do this using much of the setup in Example 10.6.1. Let us again set $\mu_0 = 240$ and $\mu = 240.03$, but we want the difference to be a large effect. To do that, we ought to choose $\sigma$ to be smaller, so that the difference is "large" relative to the standard deviation. To illustrate, let's choose $\sigma = 0.03$. With this choice, the difference between $\mu$ and $\mu_0$ is 1 standard deviation, which we know to be a nontrivial distance in a population.

Since the effect is large, we ought to be able to detect it even with a small sample size. Repeat the simulation in Example 10.6.1, using $\sigma = 0.03$ and a small $n$. You will likely see a result like the one shown in Figure 10.36.
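With a small sample, the normal approximation used for large $n$ is no longer adequate, so the sketch below computes the two-sided $p$-value from Student's $t$ distribution by integrating its density with Simpson's rule. The settings $\mu = 240.03$, $\sigma = 0.03$, $\mu_0 = 240$ follow Example 10.6.2; `N = 10` and the seed are assumptions, since the sample size used in Figure 10.36 may differ. The helper names are hypothetical.

```python
import math
import random
import statistics


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
    c = math.exp(log_c) / math.sqrt(df * math.pi)
    return c * (1 + x * x / df) ** (-(df + 1) / 2)


def t_two_sided_p(t, df, steps=2000):
    """Two-sided p-value 2 * P(T > |t|), integrating the density on
    [0, |t|] with composite Simpson's rule (steps must be even)."""
    b = abs(t)
    h = b / steps
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3  # P(0 < T < |t|)
    return min(1.0, max(0.0, 1.0 - 2 * area))


# Example 10.6.2 settings; N and the seed are assumptions.
MU_TRUE, SIGMA, MU0, N = 240.03, 0.03, 240.0, 10
rng = random.Random(7)
sample = [rng.gauss(MU_TRUE, SIGMA) for _ in range(N)]

x_bar, s = statistics.mean(sample), statistics.stdev(sample)
t = (x_bar - MU0) / (s / math.sqrt(N))
p_value = t_two_sided_p(t, N - 1)
d_hat = abs(x_bar - MU0) / s  # Equation 10.8
print(f"t = {t:.2f}, p = {p_value:.4f}, Cohen's d (estimate) = {d_hat:.2f}")
```

In a typical run, $\hat{d}$ lands near 1, a large effect, even though only ten drives were simulated.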

Using Cohen's $d$

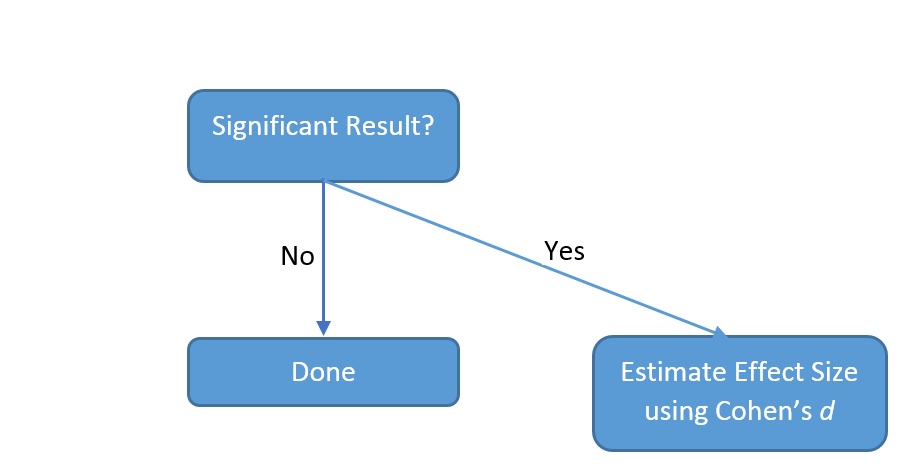

You should estimate the effect size only when you get a significant result; i.e., if you fail to reject $H_0$, then don't use Cohen's $d$. If a result is significant, then use Equation 10.8 to approximate the effect size. This is illustrated by the flowchart in Figure 10.37.
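The decision in the flowchart can be written as a small gate: report $\hat{d}$ only after $H_0$ is rejected. The sketch below assumes the $p$-value has already been computed elsewhere and uses the convention "reject when $p \le \alpha$"; the function name is hypothetical.

```python
import statistics


def effect_size_if_significant(sample, mu0, p_value, alpha=0.05, sigma=None):
    """Estimate Cohen's d only after rejecting H0.

    Returns None when the result is not significant (p_value > alpha);
    otherwise returns |x_bar - mu0| / s, or / sigma when sigma is known.
    """
    if p_value > alpha:
        return None  # fail to reject H0: do not report Cohen's d
    scale = sigma if sigma is not None else statistics.stdev(sample)
    return abs(statistics.mean(sample) - mu0) / scale
```

Returning `None` instead of a number makes it hard to report an effect size for a non-significant result by accident.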

Table 10.1 gives guidelines for interpreting values of Cohen's $d$.

| Cohen's $d$ | Effect Size |
|---|---|
| 0.2 | Small |
| 0.5 | Medium |
| 0.8 | Large |
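One simple way to apply Table 10.1 is to label an estimate with the largest benchmark it reaches. The sketch below does that; the label "below small" for values under 0.2 is an assumption, since the table lists only 0.2, 0.5, and 0.8, and the function name is hypothetical.

```python
def describe_effect(d):
    """Label |d| with the largest Table 10.1 benchmark it reaches.

    "below small" for |d| < 0.2 is an assumed label, not from the table.
    """
    d = abs(d)
    if d >= 0.8:
        return "large"
    if d >= 0.5:
        return "medium"
    if d >= 0.2:
        return "small"
    return "below small"


for d in (0.03, 0.35, 0.6, 1.0):
    print(d, describe_effect(d))
```

The thresholds are guidelines rather than hard cutoffs, so a value just under a benchmark deserves the same judgment as one just over it.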

Example 10.6.3.

A company has committed to purchase an industrial glue if there is strong evidence to support that the mean sealing strength, at a room temperature of 100°F, is greater than 20 lb/in². Following are the sealing strengths, measured in lb/in², of a sample of 10 tested at 100°F:

At the 5% significance level, test whether the company should purchase the glue. If the null hypothesis is rejected, estimate the effect size.

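Solution: From Example 10.5.1, the $p$-value is approximately 0.0166, and hence $H_0$ is rejected. Thus, it is appropriate to estimate the effect size. From the calculations in Example 10.5.1, we have the sample mean $\bar{x}$ and the sample standard deviation $s$, and hence, by Equation 10.8 with $\mu_0 = 20$,

$$d \approx \frac{|\bar{x} - 20|}{s}.$$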

This implies a large effect size, i.e., the average sealing strength surpasses the company's minimum expectation by a margin that is large relative to the variation in sealing strength, and the company should purchase the glue.